Sequential Preference Ranking for Efficient Reinforcement Learning from Human Feedback

Abstract

Reinforcement learning from human feedback (RLHF) alleviates the problem of designing a task-specific reward function in reinforcement learning by learning it from human preference. However, existing RLHF models are considered inefficient because they obtain only a single preference label from each piece of human feedback. To tackle this problem, we propose a novel RLHF framework called SeqRank that uses sequential preference ranking to enhance feedback efficiency. Our method samples trajectories sequentially by iteratively selecting a defender from the set of previously chosen trajectories K and a challenger from the set of unchosen trajectories U \ K, where U is the replay buffer. We propose two trajectory comparison methods with different defender sampling strategies: (1) sequential pairwise comparison, which selects the most recent trajectory, and (2) root pairwise comparison, which selects the most preferred trajectory from K. We construct a data structure and rank trajectories by preference to augment additional queries. The proposed method achieves at least 39.2% higher average feedback efficiency than the baseline while also balancing feedback efficiency and data dependency. We examine the convergence of the empirical risk and the generalization bound of the reward model with Rademacher complexity. While both trajectory comparison methods outperform conventional pairwise comparison, root pairwise comparison improves the average reward in locomotion tasks and the average success rate in manipulation tasks by 29.0% and 25.0%, respectively.

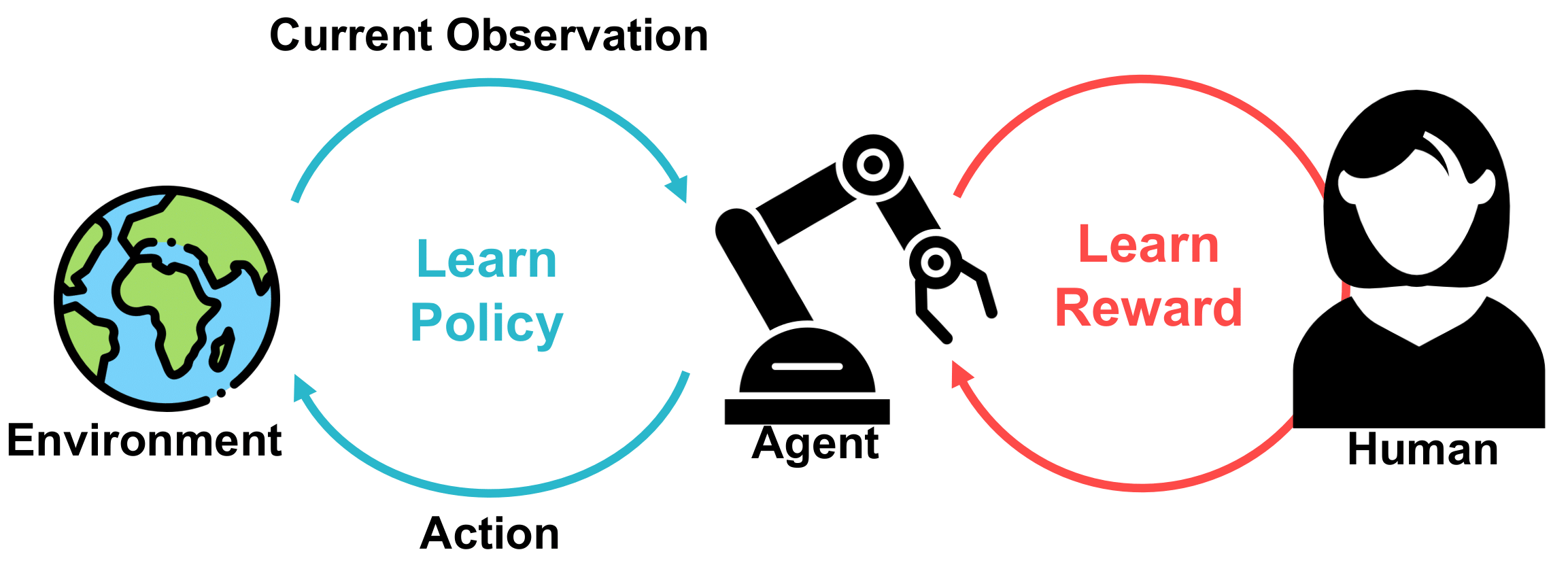

Reinforcement Learning from Human Feedback (RLHF)

Reinforcement learning from human feedback (RLHF) directly learns from human preferences without the need for a hand-crafted reward function.

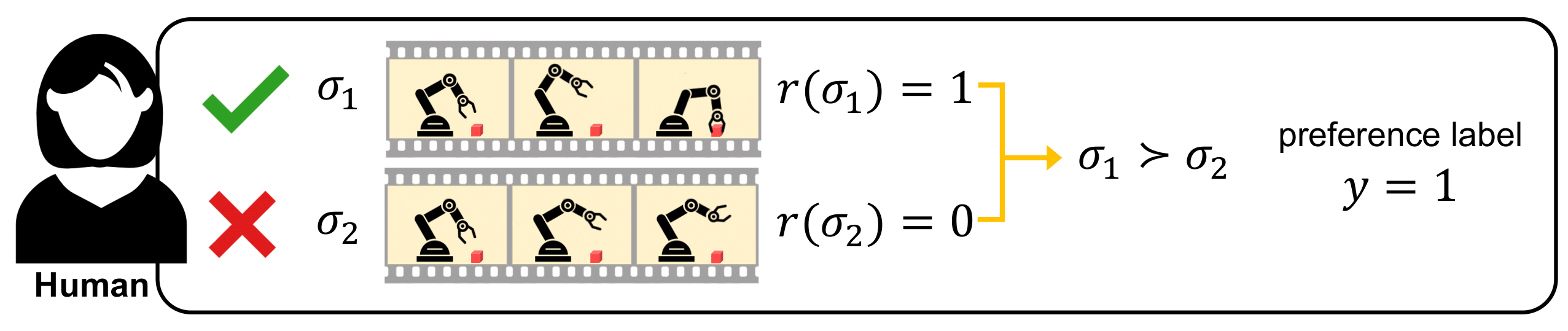

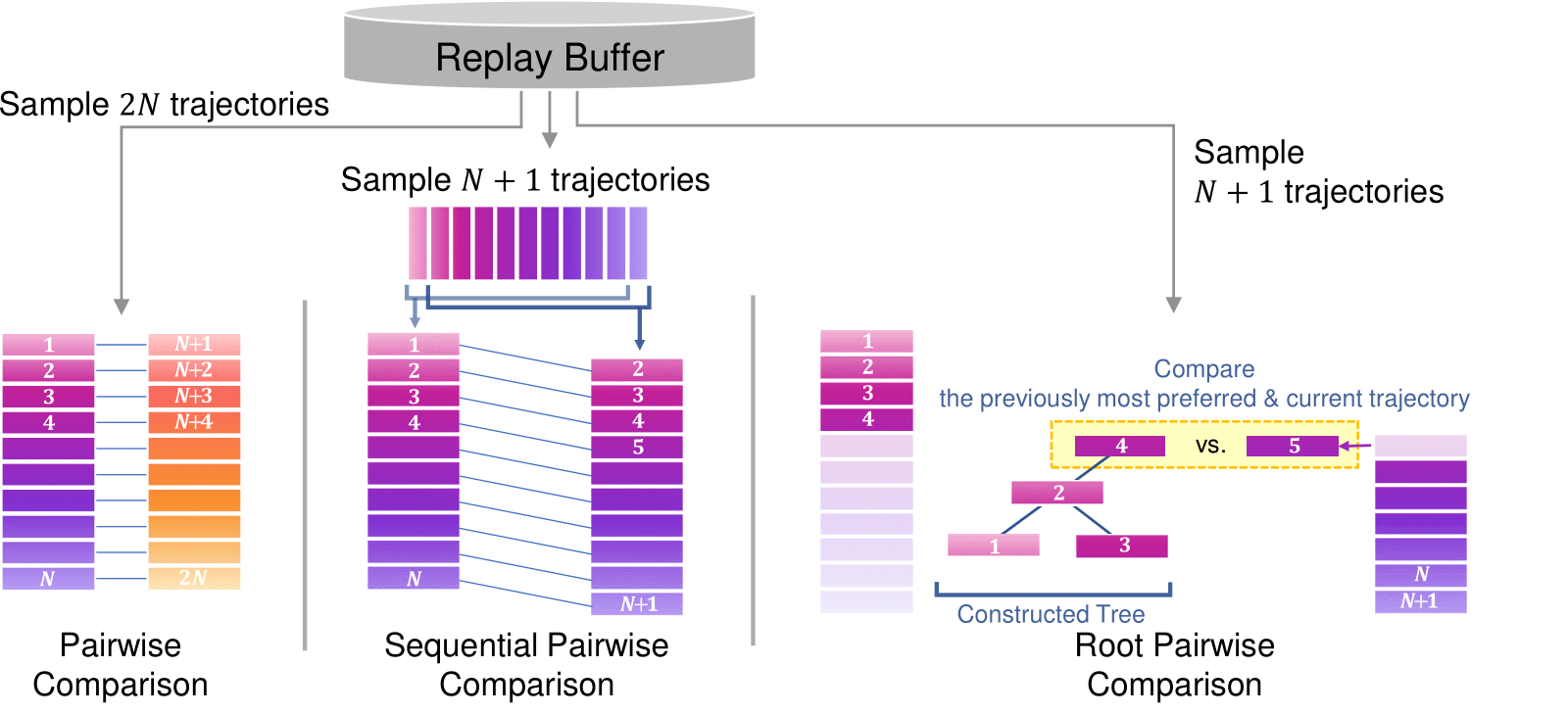

A conventional way to learn a reward function in RLHF is pairwise comparison.

Using pairwise comparison, the agent queries a human to compare two different trajectories. Because each query yields exactly one preference label, the feedback efficiency is fixed at a standardized level of 1.
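Pairwise labels are commonly turned into a reward model with the Bradley–Terry formulation used in preference-based RL: the probability that one segment is preferred is a logistic function of the difference between the predicted segment returns, and the reward model minimizes the cross-entropy to the human label. This page does not state SeqRank's loss, so the sketch below assumes this standard form; the names `preference_probability` and `preference_loss` are hypothetical.

```python
import math
from typing import Sequence


def _sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def preference_probability(rewards_0: Sequence[float], rewards_1: Sequence[float]) -> float:
    """Bradley-Terry probability that segment 0 is preferred to segment 1 (assumed model).

    Each argument lists the reward model's per-step predictions for one segment;
    the result equals exp(R0) / (exp(R0) + exp(R1)) with R the summed rewards.
    """
    return _sigmoid(sum(rewards_0) - sum(rewards_1))


def preference_loss(rewards_0: Sequence[float], rewards_1: Sequence[float], label: float) -> float:
    """Cross-entropy between a human label (1.0 = segment 0 preferred, 0.0 = segment 1) and P."""
    p = preference_probability(rewards_0, rewards_1)
    eps = 1e-12  # avoids log(0) when the model is saturated
    return -(label * math.log(p + eps) + (1.0 - label) * math.log(1.0 - p + eps))
```

Under this model each conventional query contributes exactly one loss term, which is why its feedback efficiency is the baseline of 1.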

SeqRank

We propose a novel RLHF framework called SeqRank. Our method uses sequential preference ranking to enhance feedback efficiency and reduce the human labeling effort.

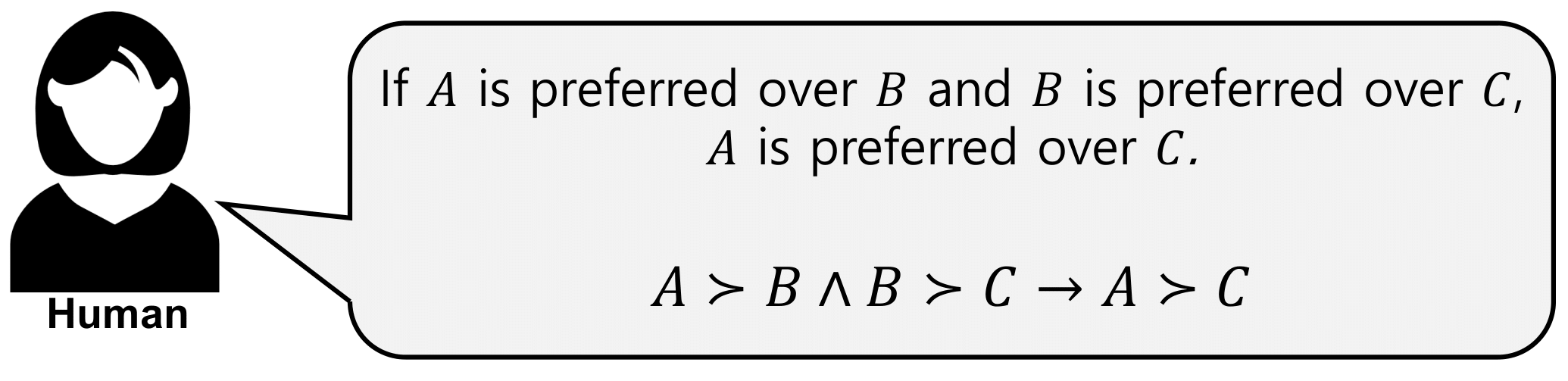

The key idea of our approach is to utilize the preference relationships among previously compared trajectory pairs. By exploiting the transitivity of human preferences, we can augment the preference data.

Our method samples trajectories sequentially by iteratively selecting a defender from the set of previously chosen trajectories K and a challenger from the set of unchosen trajectories U \ K, where U is the replay buffer. Specifically, we propose two trajectory comparison methods with different defender sampling strategies:

- Sequential Pairwise Comparison: defender = most recently sampled trajectory

- Root Pairwise Comparison: defender = previously most preferred trajectory
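The two defender strategies differ in a single line of the sampling loop. Below is a minimal sketch, assuming challengers are drawn uniformly at random without replacement from U \ K and that a callable `prefers(a, b)` stands in for the human labeler. The function `seqrank_queries` and its parameters are hypothetical illustrations, not the authors' code.

```python
import random
from typing import Callable, List, Tuple

Label = Tuple[int, int]  # (preferred index, less preferred index) into the replay buffer


def seqrank_queries(
    buffer_size: int,
    num_queries: int,
    prefers: Callable[[int, int], bool],
    strategy: str = "root",
    seed: int = 0,
) -> List[Label]:
    """Hypothetical sketch of sequential query generation with two defender strategies.

    prefers(a, b) simulates the human and returns True if segment a is preferred to b.
    """
    if strategy not in ("sequential", "root"):
        raise ValueError(f"unknown strategy: {strategy}")
    if not 0 < num_queries < buffer_size:
        raise ValueError("need 0 < num_queries < buffer_size")
    rng = random.Random(seed)
    unchosen = list(range(buffer_size))  # U \ K, stored in random draw order
    rng.shuffle(unchosen)
    defender = unchosen.pop()  # K = {first drawn segment}
    labels: List[Label] = []
    for _ in range(num_queries):
        challenger = unchosen.pop()  # uniform draw without replacement from U \ K
        if prefers(challenger, defender):
            winner, loser = challenger, defender
        else:
            winner, loser = defender, challenger
        labels.append((winner, loser))
        # sequential: most recently sampled segment; root: most preferred segment in K
        defender = challenger if strategy == "sequential" else winner
    return labels
```

With `strategy="root"`, the next defender is always the winner of the previous comparison, which by transitivity is the most preferred segment in K; with `strategy="sequential"`, it is simply the previous challenger.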

Sequential Pairwise Comparison

Sequential pairwise comparison selects the most recently sampled trajectory as the defender.

Root Pairwise Comparison

Root pairwise comparison selects the previously most preferred trajectory as the defender.

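Both sequential and root pairwise comparison can augment additional preference data through transitivity: if σ_a is preferred to σ_b and σ_b is preferred to σ_c, then σ_a is preferred to σ_c without another query.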

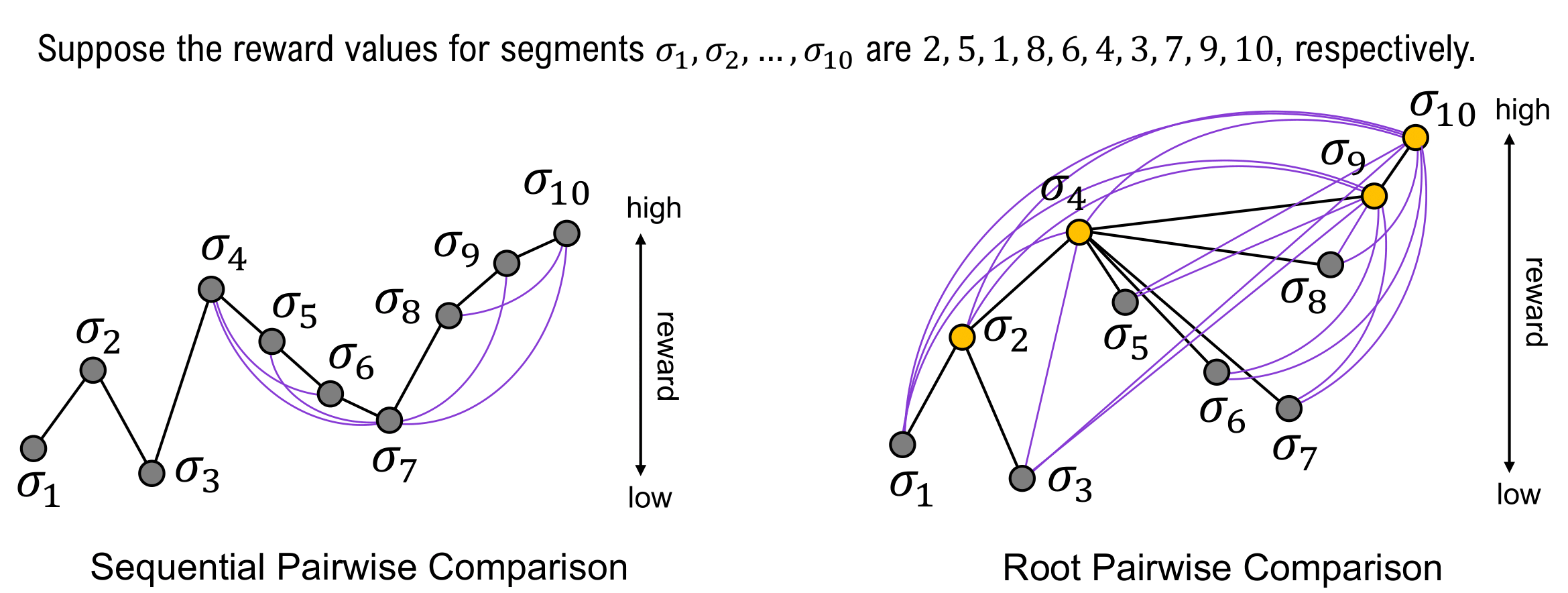

Toy Example

Suppose the reward values for segments σ_1, σ_2, …, σ_10 are 2, 5, 1, 8, 6, 4, 3, 7, 9, 10, respectively. Then, we can construct a graph for each trajectory comparison method.

Black lines indicate actual pairs that receive true preference labels from human feedback. Purple lines describe augmented labels for non-adjacent pairs.
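The toy example can be reproduced in a few lines. This sketch assumes the segments are sampled in index order (σ_1 as the first defender, then σ_2 through σ_10 as challengers), that the simulated human always prefers the higher reward, and that augmentation adds every pair implied by transitivity of the queried labels. The helpers `query_labels` and `transitive_closure` are hypothetical, and the counts they print belong to this single example, not to the averages in the theoretical analysis.

```python
from typing import Dict, List, Set, Tuple

# (preferred segment, less preferred segment), using 1-based indices for σ_1 ... σ_10
Label = Tuple[int, int]

# Toy rewards of σ_1, ..., σ_10 from the example above.
REWARDS: Dict[int, int] = {i + 1: r for i, r in enumerate([2, 5, 1, 8, 6, 4, 3, 7, 9, 10])}


def query_labels(strategy: str) -> List[Label]:
    """Simulated human labels, assuming segments are sampled in index order."""
    if strategy not in ("sequential", "root"):
        raise ValueError(f"unknown strategy: {strategy}")
    labels: List[Label] = []
    defender = 1  # σ_1 is the first element of K
    for challenger in range(2, len(REWARDS) + 1):
        if REWARDS[challenger] > REWARDS[defender]:
            winner, loser = challenger, defender
        else:
            winner, loser = defender, challenger
        labels.append((winner, loser))
        # Next defender: the newest segment (sequential) or the current root (root).
        defender = challenger if strategy == "sequential" else winner
    return labels


def transitive_closure(labels: List[Label]) -> Set[Label]:
    """Every (winner, loser) pair implied by the queried labels via transitivity."""
    beats: Dict[int, Set[int]] = {}
    for winner, loser in labels:
        beats.setdefault(winner, set()).add(loser)
    closure: Set[Label] = set()
    for start in beats:
        stack = list(beats[start])
        seen: Set[int] = set()
        while stack:  # depth-first search over "is preferred to" edges
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            closure.add((start, node))
            stack.extend(beats.get(node, ()))
    return closure


for strategy in ("sequential", "root"):
    queried = query_labels(strategy)
    augmented = transitive_closure(queried) - set(queried)
    print(strategy, len(queried), len(augmented))
# prints:
# sequential 9 6
# root 9 17
```

Under these assumptions, root pairwise comparison turns the same nine queries into 17 augmented labels versus 6 for sequential pairwise comparison, because every time a challenger beats the root it is ranked above all of K at once.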

Theoretical Analyses

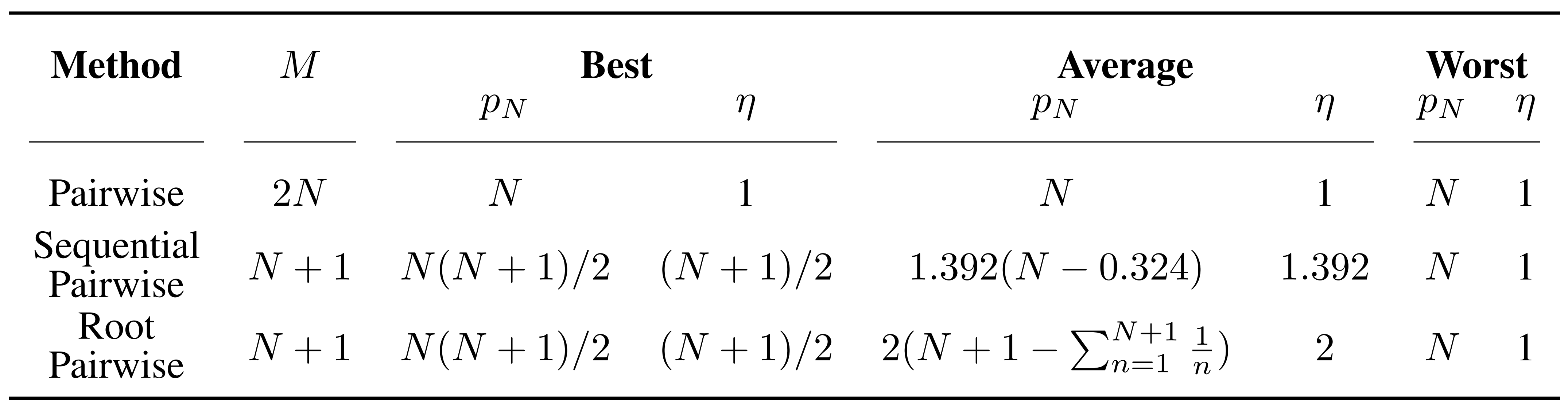

We prove that sequential and root pairwise comparison achieve 39.2% and 100% higher average feedback efficiency, respectively, than conventional pairwise comparison.

Trade-Off between Feedback Efficiency and Data Dependency

We show that the convergence rate of the empirical risk is in the order of root > sequential > pairwise comparison. On the other hand, the convergence rate of the generalization bound is in the order of root = pairwise > sequential comparison.

Results

Simulation Experiments

We show that overall performance in DMControl ranks, from highest to lowest, as root, sequential, and pairwise comparison.

DMControl - Quadruped Walk

In the example trajectories in the quadruped walk task, the agent trained using pairwise comparison fails to turn its body upside down.

DMControl - Walker Walk

In the example trajectories in the walker walk task, the agent trained using root pairwise comparison shows the fastest and most stable gait.

MetaWorld - Drawer Open - Scenario I

In the first scenario in the drawer open task, the agent trained using pairwise comparison fails to open the drawer.

MetaWorld - Drawer Open - Scenario II

In the second scenario in the drawer open task, all agents open the drawer, but the agents trained using pairwise and sequential pairwise comparison are unstable: their end effectors oscillate with large and small amplitudes, respectively.

MetaWorld - Window Open

In the example trajectories in the window open task, only the agent trained using root pairwise comparison succeeds in opening the window.

MetaWorld - Hammer

In the example trajectories in the hammer task, agents trained using sequential and root pairwise comparison succeed in driving a nail into the wooden box.

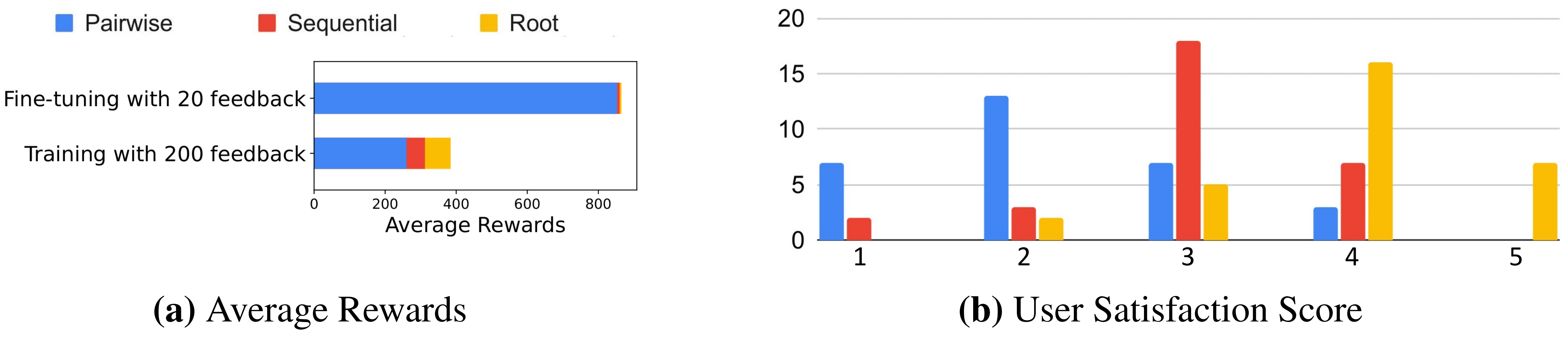

Real Human Feedback Experiments

We conduct experiments with real human feedback to compare the user stress level for each method. Each participant trained a cheetah agent to run as fast as possible.

Pairwise Comparison (Baseline)

Sequential Pairwise Comparison (Ours)

Root Pairwise Comparison (Ours)

Root pairwise comparison is the least burdensome for real human users while achieving the highest performance.

After the experiments, the participants completed a survey. The reported user satisfaction scores were 2.20 (pairwise), 3.00 (sequential), and 3.93 (root). The most significant preference criterion was the overall distance moved by the agent.

Real Robot Experiments - Block Placing

To demonstrate our method in real-world environments, we conduct a block placing task using a real UR-5 robot.

BibTeX

@inproceedings{hwang2023seqrank,
  author = {Hwang, Minyoung and Lee, Gunmin and Kee, Hogun and Kim, Chan Woo and Lee, Kyungjae and Oh, Songhwai},
  title = {Sequential Preference Ranking for Efficient Reinforcement Learning from Human Feedback},
  booktitle = {Neural Information Processing Systems Track on Datasets and Benchmarks (NeurIPS)},
  month = {December},
  year = {2023},
}